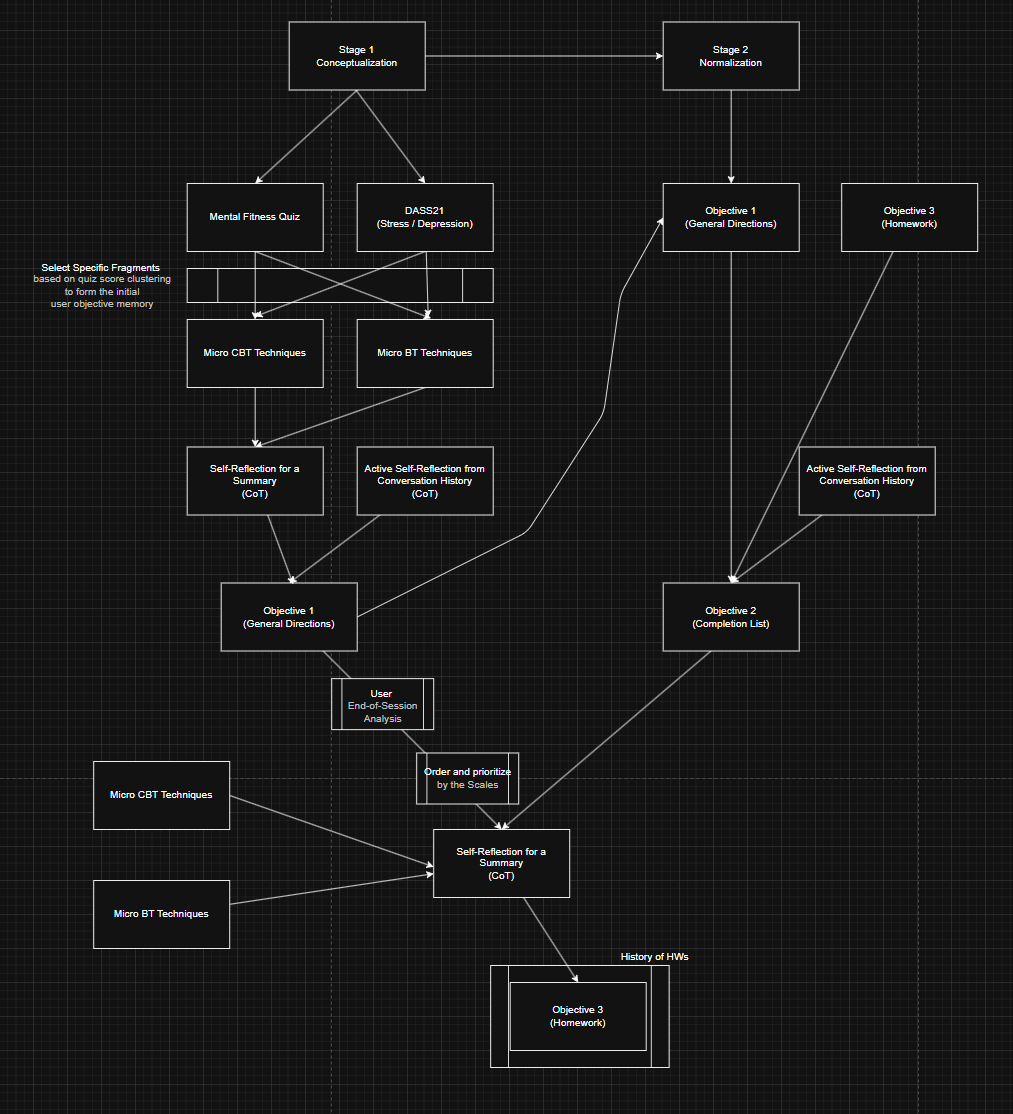

RAG Pipeline for a DE-CBT Therapist Chatbot

In this case study, we demonstrate how a Retrieval-Augmented Generation (RAG) pipeline supports an automated therapy bot based on Darwinian Enhanced Cognitive Behavioral Therapy (DE-CBT) within the ABCDE framework (Activating Events, Beliefs, Consequences, Disputing, and New Emotions/Behaviors). The pipeline employs hybrid semantic embedding methods (BGE-M3), multi-level memory design, and BM25 (for optional lexical ranking), delivering a scalable and personalized experience in mental health interventions.

Why This Matters

- Therapeutic Scale: Demand for mental health care outstrips supply. Automated solutions can widen access, especially when support is needed on demand.

- DE-CBT: Framing maladaptive behaviors as evolutionary mismatches helps users conceptualize and normalize them.

- RAG Pipeline: Combining retrieval (for high-relevance content) with large language models (for context-sensitive responses) yields up-to-date, user-tailored therapy content.

Pipeline Overview

Key Components:

- Multi-User, Multi-Chat, Multi-Task: Each user has individualized therapy sessions, stored in segregated namespaces.

- Triple-Memory System:

  - Protocol Memory (global DE-CBT & ABCDE resources)

  - Objective Memory (user-specific tasks/goals, disputation progress, homework)

  - Episodic Memory (per-session transcripts)

- Hybrid Embeddings + BM25: Dense, sparse, and ColBERT embeddings plus an optional BM25 lexical rank, fused via Reciprocal Rank Fusion (RRF).

- ABCDE + DE-CBT: Adheres to a stepwise therapy structure, supported by a Darwinian lens to reframe maladaptive behaviors.

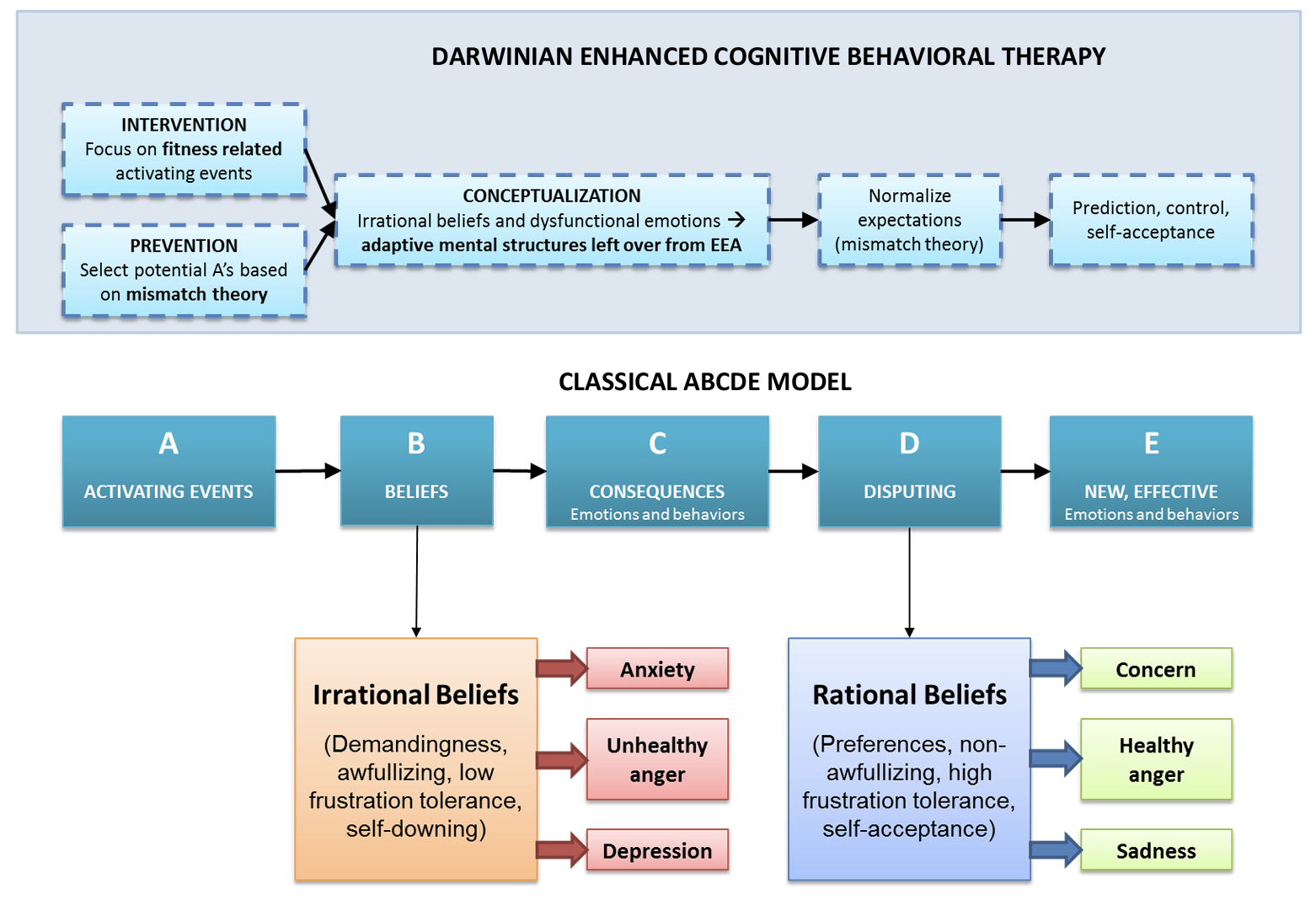

1. DE-CBT Therapy Framework with ABCDE

DE-CBT & ABCDE

- DE-CBT: Integrates evolutionary insights into standard CBT, viewing many maladaptive behaviors as mismatches from once-adaptive ancestral strategies.

- ABCDE: Standard CBT flow—(A) Activating Events, (B) Beliefs, (C) Consequences, (D) Disputing, and (E) New Emotions/Behaviors.

Implementation in Our Bot

- Each conversation follows Activating Events → Beliefs → Consequences → Disputing → New Emotions/Behaviors.

- Protocol memory houses psychoeducational resources and therapy techniques based on the evolutionary mismatch perspective.

This ensures each session proceeds in a standardized yet personalized manner, using chain-of-thought reflection to adapt future steps.
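
The fixed stage order described above can be sketched as a small state machine. This is only an illustration: the names `Stage`, `next_stage`, and `walk_session` are hypothetical and are not taken from the bot's actual code.

```python
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    """The five ABCDE stages, in the order a session visits them."""
    ACTIVATING_EVENT = "A"
    BELIEFS = "B"
    CONSEQUENCES = "C"
    DISPUTING = "D"
    NEW_EMOTIONS_BEHAVIORS = "E"


# Enum iteration preserves definition order, so this is A, B, C, D, E.
STAGE_ORDER = list(Stage)


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the stage after `current`, or None once stage E is done."""
    idx = STAGE_ORDER.index(current)
    return STAGE_ORDER[idx + 1] if idx + 1 < len(STAGE_ORDER) else None


def walk_session(start: Stage = Stage.ACTIVATING_EVENT) -> List[str]:
    """List the stage letters visited from `start` to the end of a session."""
    visited: List[str] = []
    stage: Optional[Stage] = start
    while stage is not None:
        visited.append(stage.value)
        stage = next_stage(stage)
    return visited
```

A real controller would presumably advance only once the language model judges the current stage complete; that decision logic is outside this sketch.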

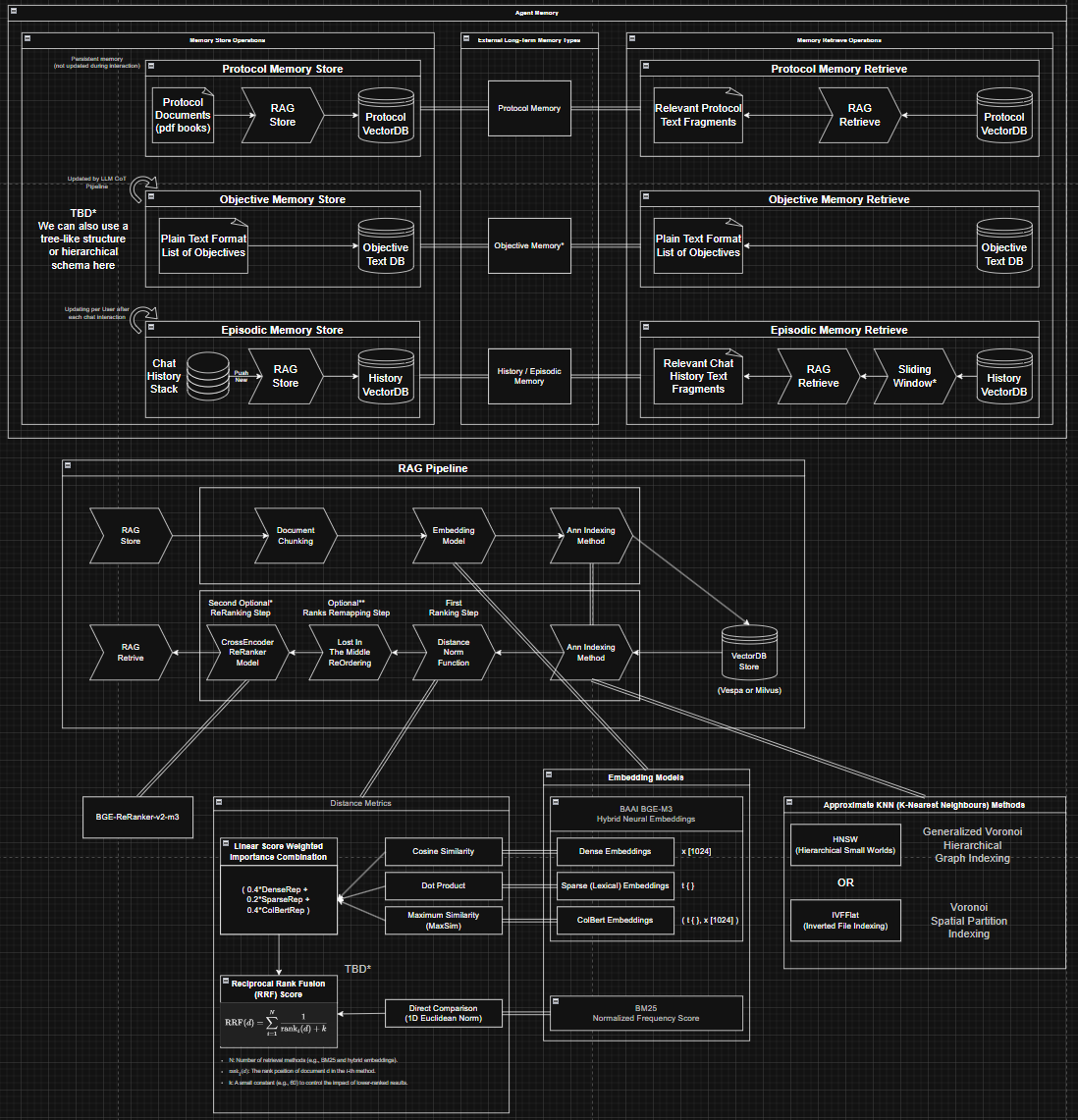

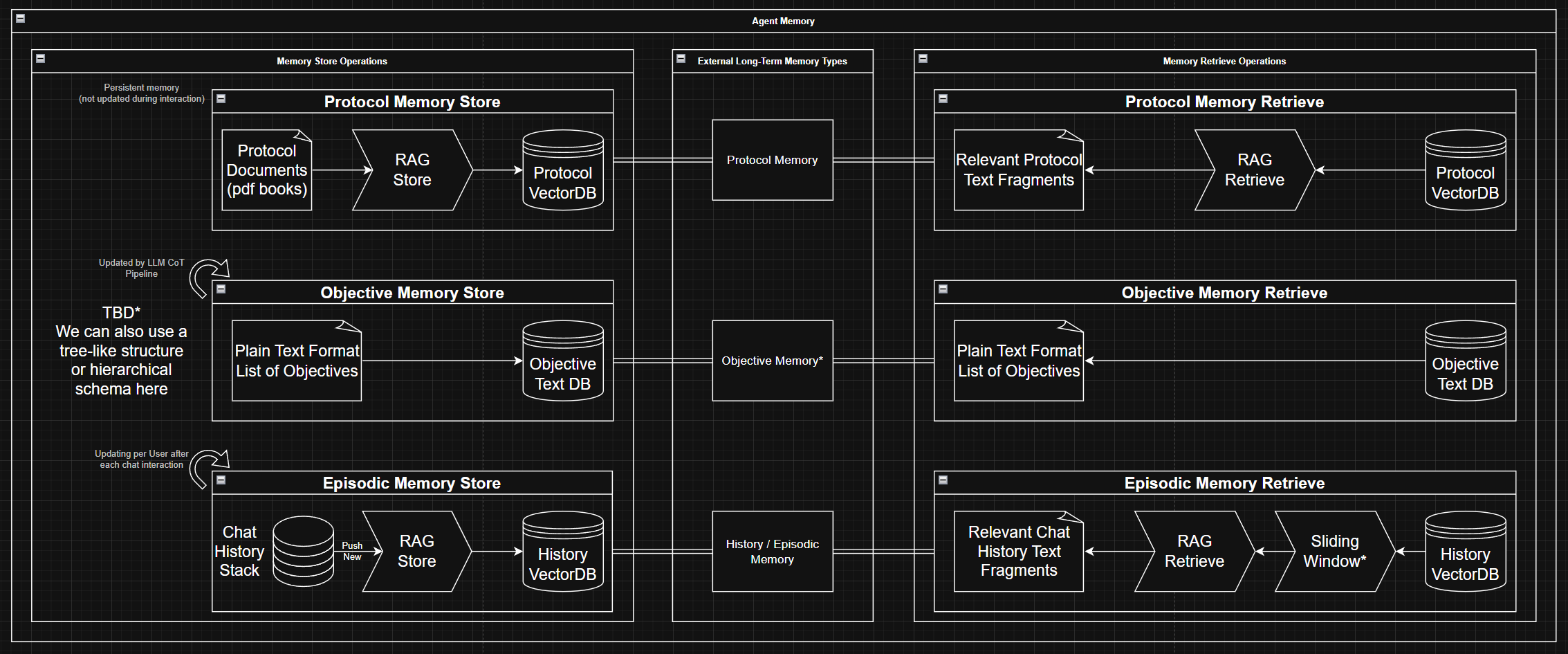

2. Multi-Level Memory Architecture

- Protocol Memory

  - Stores DE-CBT references and ABCDE guidelines, chunked and embedded for quick retrieval.

  - Serves as the global knowledge base for therapy resources.

- Objective Memory

  - General Directions (Objective 1): Summaries of user issues and high-level strategies.

  - Completion List (Objective 2): Tracks disputation milestones (when irrational beliefs are challenged).

  - Homework (Objective 3): Reinforces new behaviors via tasks assigned at session end.

- Episodic Memory

  - Per-session transcripts, embedded for retrieval.

  - Updated at each user interaction, enabling continuity from one session to the next.

This triple-memory layout merges global therapy knowledge with dynamic, user-specific states.
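
One way to picture this layout is as a set of plain data structures, with one shared protocol store and one namespace per user. The sketch below is an assumption-laden illustration: the class and field names are hypothetical, and the real system keeps embedded chunks in a vector database rather than in Python lists.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ObjectiveMemory:
    general_directions: str = ""                               # Objective 1
    completion_list: List[str] = field(default_factory=list)   # Objective 2
    homework: List[str] = field(default_factory=list)          # Objective 3


@dataclass
class UserMemory:
    objective: ObjectiveMemory = field(default_factory=ObjectiveMemory)
    # Episodic memory: session id -> ordered transcript turns.
    episodic: Dict[str, List[str]] = field(default_factory=dict)


@dataclass
class MemoryStore:
    # Protocol memory is global: every user reads the same DE-CBT/ABCDE chunks.
    protocol: List[str] = field(default_factory=list)
    # One namespace per user id keeps user-specific state separated.
    users: Dict[str, UserMemory] = field(default_factory=dict)

    def namespace(self, user_id: str) -> UserMemory:
        """Return the user's namespace, creating it on first access."""
        return self.users.setdefault(user_id, UserMemory())

    def log_turn(self, user_id: str, session_id: str, text: str) -> None:
        """Append one transcript turn to the user's episodic memory."""
        turns = self.namespace(user_id).episodic.setdefault(session_id, [])
        turns.append(text)
```

Because every read and write goes through `namespace(user_id)`, one user's transcripts and objectives never appear in another user's view, while `protocol` stays shared.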

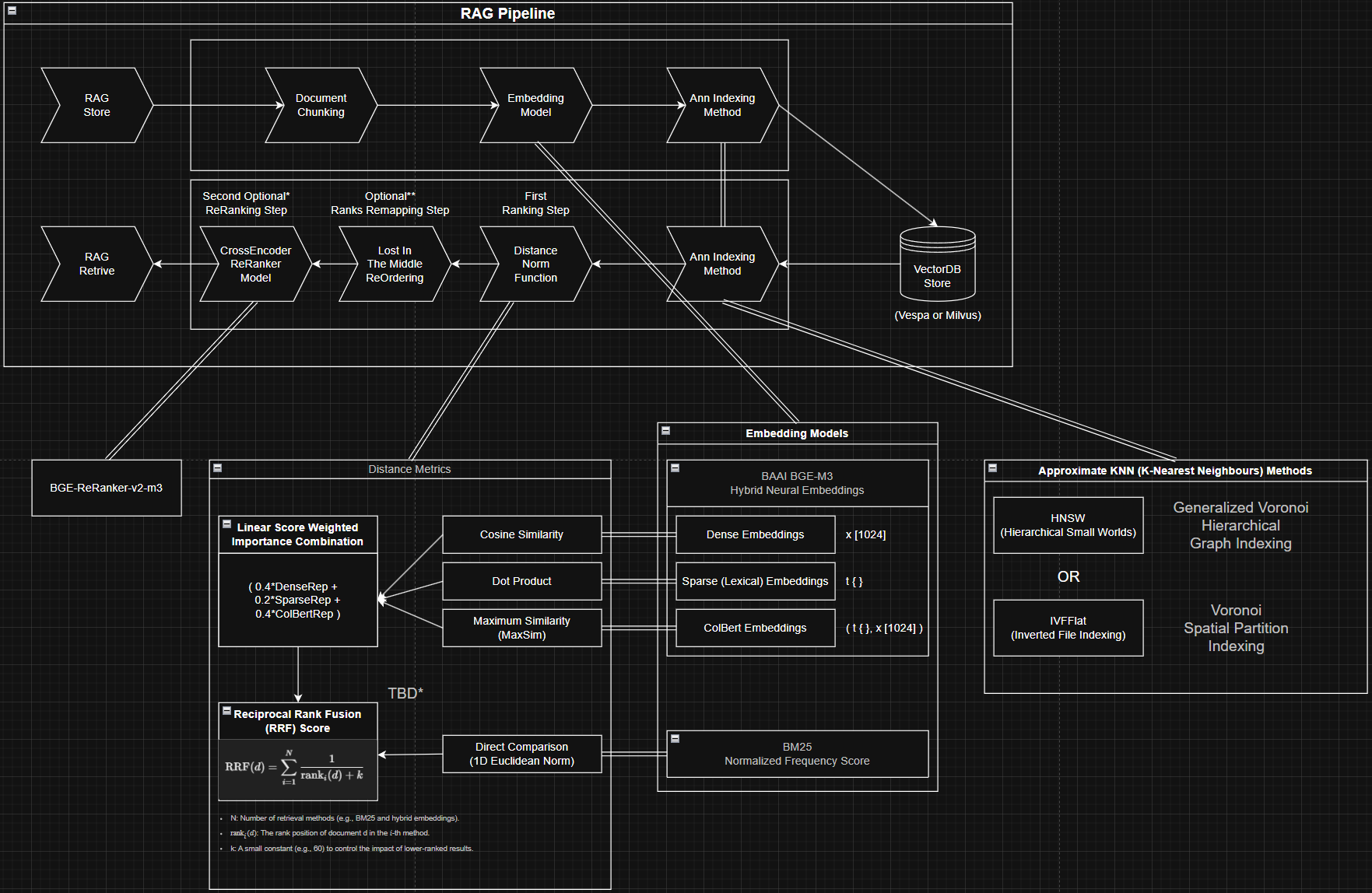

3. Embedding Model: BGE-M3 + BM25

BGE-M3 (Multi-Functionality, Multi-Linguality, Multi-Granularity)

- Dense Vectors: Capture semantic context.

- Sparse Vectors: Preserve keyword-level alignment.

- ColBERT (multi-vector): Token-level matching for finer nuance.

All data (protocol texts, user transcripts) passes through this embedding pipeline and is stored in Vespa, which serves as the vector database. An optional BM25 rank can boost purely lexical matches.

Fusion Ranking

- Initial ANN retrieval from each semantic embedding representation.

- Weighted Fusion: Combine the dense, sparse, and ColBERT scores into a single semantic ranking.

- Reciprocal Rank Fusion (RRF): Integrate the fusion ranking with BM25 results, balancing semantic and lexical signals.
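
The two fusion steps above can be sketched in a few lines of plain Python. The weights, the chunk ids, and the function names are illustrative assumptions; the source does not state the actual weights, and the sketch assumes the three score types have already been normalized to comparable ranges. The constant `k = 60` is the value commonly used for RRF, not one confirmed by the source.

```python
from typing import Dict, List, Sequence, Tuple


def weighted_fusion(
    dense: Dict[str, float],
    sparse: Dict[str, float],
    colbert: Dict[str, float],
    weights: Tuple[float, float, float] = (0.4, 0.2, 0.4),  # assumed values
) -> Dict[str, float]:
    """Weighted sum of per-chunk scores; a missing score counts as 0."""
    w_d, w_s, w_c = weights
    ids = set(dense) | set(sparse) | set(colbert)
    return {
        i: w_d * dense.get(i, 0.0) + w_s * sparse.get(i, 0.0) + w_c * colbert.get(i, 0.0)
        for i in ids
    }


def to_ranking(scores: Dict[str, float]) -> List[str]:
    """Sort chunk ids by descending score, breaking ties by id."""
    return sorted(scores, key=lambda i: (-scores[i], i))


def reciprocal_rank_fusion(rankings: Sequence[List[str]], k: int = 60) -> List[str]:
    """Score each id as the sum of 1 / (k + rank) over all rankings."""
    fused: Dict[str, float] = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            fused[chunk_id] = fused.get(chunk_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=lambda i: (-fused[i], i))


# Toy scores for three hypothetical chunks.
semantic = to_ranking(weighted_fusion(
    dense={"c1": 0.9, "c2": 0.6, "c3": 0.2},
    sparse={"c1": 0.3, "c2": 0.8},
    colbert={"c1": 0.7, "c3": 0.5},
))
final = reciprocal_rank_fusion([semantic, ["c2", "c3", "c1"]])  # second list: BM25
```

Here `semantic` is `["c1", "c2", "c3"]`, and after merging with the BM25 ranking `final` is `["c2", "c1", "c3"]`: `c2` wins because it ranks near the top of both lists. RRF uses only ranks, which is why it can combine BM25 with the semantic ranking without calibrating their score scales.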

4. Retrieval-Then-Generate Workflow

- Document Chunking: Splits long texts (e.g., CBT manuals, user transcripts) into manageable sizes.

- ANN Search: Finds the top-k chunks via the dense/sparse/ColBERT indexes in Vespa.

- Custom Fusion: Weighted scoring produces a semantic ranking, which RRF merges with the BM25 ranking into the final list.

- LLM Generation: The selected chunks feed a large language model, shaping the conversation output with appropriate context.

This ensures each response is anchored by relevant, up-to-date material from the user’s therapy logs and general CBT knowledge.
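
The first and last steps of this workflow, chunking and prompt assembly, might look roughly like the sketch below. The word-window chunker, its default sizes, and the prompt template are assumptions made for illustration; the source does not specify the chunking strategy or the prompt format.

```python
from typing import List


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `size` words; neighbors share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def build_prompt(user_message: str, chunks: List[str]) -> str:
    """Number the retrieved chunks and place them ahead of the user's turn."""
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(chunks, start=1))
    return f"Context:\n{context}\n\nUser: {user_message}\nTherapist:"
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side. In the full pipeline, the `chunks` passed to `build_prompt` would be the fused top-k results rather than raw chunker output.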

5. Conversational Flow in ABCDE Stages

- (A) Activating Events

  - User describes a triggering situation.

  - The pipeline retrieves context from protocol/episodic memory about similar issues.

- (B) Beliefs

  - The bot identifies potential irrational beliefs (catastrophizing, black-and-white thinking, etc.).

  - Darwinian perspective normalizes these beliefs as once-adaptive but now mismatched.

- (C) Consequences

  - Emotional/behavioral outcomes are highlighted.

  - The system references psychoeducation to illustrate typical outcomes.

- (D) Disputing

  - The system guides the user in challenging these beliefs, pulling best-fit disputation techniques from protocol memory.

  - Progress gets recorded in Objective 2 (completion list).

- (E) New Emotions/Behaviors

  - Final portion: The bot prescribes homework tasks in Objective 3, ensuring post-session practice.

  - These tasks reflect the newly formed or corrected beliefs.
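
The stage descriptions above imply a simple routing table: which memories each stage reads from and which objective list, if any, it writes to. The sketch below encodes one reading of that table. The dictionary names and keys are hypothetical, and the empty read list for stage E is an assumption, since the text does not say what that stage retrieves.

```python
from typing import Dict, List, Optional

# Memories consulted at each stage (stage E's sources are an assumption).
READS: Dict[str, List[str]] = {
    "A": ["protocol", "episodic"],
    "B": ["protocol"],
    "C": ["protocol"],
    "D": ["protocol"],
    "E": [],
}

# Objective list updated at each stage, if any.
WRITES: Dict[str, Optional[str]] = {
    "A": None,
    "B": None,
    "C": None,
    "D": "completion_list",  # Objective 2
    "E": "homework",         # Objective 3
}


def apply_stage_write(
    stage: str, note: str, objective: Dict[str, List[str]]
) -> Optional[str]:
    """Append `note` to the list this stage writes to; return that list's name."""
    target = WRITES[stage]
    if target is not None:
        objective.setdefault(target, []).append(note)
    return target
```

For example, calling `apply_stage_write("D", "Challenged catastrophizing", objective)` adds a milestone under `completion_list`, while the same call for stage A leaves `objective` untouched.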

6. Chain-of-Thought Reflection & Session End

Chain-of-Thought

- Dynamically updates Objective 1 (general directions), referencing the user’s evolving goals.

- Compares progress in Objective 2 (disputation milestones) and modifies or finalizes Objective 3 (homework).

Session End Trigger

- Monitors user activity and inactivity to detect when a session should end.

- Provides a session summary, issues or updates homework tasks, then formally concludes the session.

This structure ensures natural stopping points and consistent session flow.
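
A minimal sketch of such a trigger, assuming inactivity is measured as time since the last user message, is shown below. The 15-minute timeout, the `Session` fields, and the template summary are all hypothetical; in the actual bot the summary would presumably be generated by the language model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Session:
    last_activity: float  # timestamp in seconds of the last user message
    completed: List[str] = field(default_factory=list)  # disputation milestones
    homework: List[str] = field(default_factory=list)
    closed: bool = False


def should_end(session: Session, now: float, timeout_s: float = 900.0) -> bool:
    """True once the user has been inactive for at least `timeout_s` seconds."""
    return now - session.last_activity >= timeout_s


def close_session(session: Session) -> str:
    """Mark the session closed and return a plain-text summary."""
    session.closed = True
    milestones = "; ".join(session.completed) or "none recorded"
    tasks = "; ".join(session.homework) or "none assigned"
    return f"Session summary. Milestones: {milestones}. Homework: {tasks}."
```

Passing `now` explicitly, rather than reading the clock inside `should_end`, keeps the check easy to test and lets a scheduler poll many sessions with one timestamp.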

7. Practical Implementation Highlights

- Namespace Isolation: Each user’s data lives in its own namespace, so concurrent sessions cannot read or overwrite one another’s memory.

- Assessments & Quizzes: Intake instruments (DASS-21, Mental Fitness Quiz) shape the initial therapy directions.

- Darwinian Perspective: Normalization reinterprets maladaptive behaviors as previously adaptive solutions.
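
As an illustration of how an intake assessment could be turned into structured input, the sketch below scores a DASS-21 questionnaire. It follows the standard published scoring (seven items per subscale, each rated 0 to 3, subscale sums doubled); the item key should be checked against the official manual before any real use. How the bot actually consumes these scores is not described in the source, and `score_dass21` is a hypothetical name.

```python
from typing import Dict, List

# 1-based item numbers belonging to each DASS-21 subscale.
SUBSCALES: Dict[str, List[int]] = {
    "depression": [3, 5, 10, 13, 16, 17, 21],
    "anxiety": [2, 4, 7, 9, 15, 19, 20],
    "stress": [1, 6, 8, 11, 12, 14, 18],
}


def score_dass21(responses: List[int]) -> Dict[str, int]:
    """Return doubled subscale sums for 21 responses, each rated 0 to 3."""
    if len(responses) != 21 or any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("expected 21 responses, each an integer from 0 to 3")
    return {
        name: 2 * sum(responses[item - 1] for item in items)
        for name, items in SUBSCALES.items()
    }
```

The resulting scores could, for instance, seed the general directions in Objective 1, though that mapping is a design decision this sketch leaves open.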

8. Data Extraction and Preparation

A core contribution of this pipeline is its reliance on a large curated therapist–patient dataset, derived from audio recordings of real therapy sessions. This dataset underpins domain-specific finetuning, ensuring the chatbot’s style and content mirror authentic therapy exchanges.

- Audio Enhancement

  - open-unmix LSTM and MetricGAN: Clean and enhance raw recordings, removing background noise and low-quality artifacts.

- Speech Recognition & Diarization

  - Whisper Large v3 Turbo: High-accuracy transcription engine.

  - pyannote-based segmentation & clustering: Distinguishes speaker turns, labeling therapist vs. patient segments.

- Curation & Cleaning

  - LLM-based pipeline: Filters out uninformative utterances, repetitive small talk, and filler words.

  - Compresses unnecessary text while preserving therapy-relevant content (e.g., session transitions, emotional disclosures).

- Behavior Tuning via RLHF & DPO

  - The curated dataset feeds PPO RLHF (Proximal Policy Optimization + human feedback) or DPO (Direct Preference Optimization) training, refining the base LLM.

  - LoRA Adapter: We finetune a lightweight LoRA adapter that holds these domain-specific therapy patterns, leaving the base LLM intact.

By combining robust audio processing, advanced transcription/diarization, and an LLM-driven data curation pipeline, we assemble a high-quality therapy dataset that powers behavior tuning and domain adaptation for the final chatbot.
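
One step in this chain that is easy to show in isolation is attaching speaker labels to transcribed segments. The sketch below assumes the transcription step yields timed text segments and the diarization step yields timed speaker turns, then assigns each segment the speaker whose turn overlaps it most. The tuple layouts and function names are hypothetical and do not reflect the output formats of Whisper or pyannote; mapping anonymous speaker clusters to the therapist and patient roles is a separate step not shown here.

```python
from typing import List, Tuple

Segment = Tuple[float, float, str]  # (start_s, end_s, text) from transcription
Turn = Tuple[float, float, str]     # (start_s, end_s, speaker) from diarization


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_segments(segments: List[Segment], turns: List[Turn]) -> List[Tuple[str, str]]:
    """Pair each segment's text with the speaker whose turn overlaps it most."""
    labeled: List[Tuple[str, str]] = []
    for start, end, text in segments:
        best = max(
            turns,
            key=lambda t: overlap(start, end, t[0], t[1]),
            default=None,
        )
        if best is None or overlap(start, end, best[0], best[1]) == 0.0:
            speaker = "unknown"  # no diarization turn covers this segment
        else:
            speaker = best[2]
        labeled.append((speaker, text))
    return labeled
```

Segments that no turn covers are kept and marked `unknown` rather than dropped, so the later curation stage can decide what to do with them.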

9. Results & Observations

- High Personalization: Users experience therapy dialogues that reference their specific issues, tracked across sessions.

- Enhanced Engagement: The chain-of-thought reflection encourages user input, preventing generic or repetitive therapy scripts.

- Therapy Fidelity: Preliminary feedback from mental health professionals indicates strong alignment with standard CBT guidelines, enhanced by a Darwinian lens.

10. Future Directions

- Quiz Integration: Use clustering/inference for more specialized protocol retrieval based on user quiz results.

- Longitudinal Analytics: Track user improvements over multiple sessions to refine therapy strategy dynamically.

- Multi-Agent Collaboration: Delegate different ABCDE steps to specialized agents with individual fine-tunes (psychoeducation agent, disputation agent).

- Support for Emerging LLM Architectures: Latent memory gates (e.g., Meta EWE or Google Titans) and Transformers-Squared could store and recall knowledge internally, reducing reliance on a complex RAG pipeline and simplifying retrieval.

Conclusion

This DE-CBT therapist chatbot demonstrates how RAG, fortified by a hybrid BGE-M3 embedding model and multi-level memory, can deliver dynamic, evolutionary-informed CBT sessions. The session end trigger ensures coherent closure, with carefully curated homework and continuity into the next session.

As LLM architectures evolve (e.g., Meta EWE, Google Titans or Transformers-Squared with latent memory implementations), the need for external retrieval pipelines may diminish, suggesting a future where advanced models inherently store and leverage therapy knowledge. Nonetheless, current embedding-based retrieval methods provide a robust, scalable approach, ensuring each user receives high-fidelity DE-CBT interventions in real time.